Exciting Developments in AI: Google Gemma 2 Launch and More

Chapter 1: Introduction to the Week's AI Highlights

Greetings, AI Enthusiasts!

Welcome to this week's edition of AI News, where we curate the most thrilling updates in the realm of AI and technology. Our goal is to keep you informed and inspired! Let’s dive into this week's top stories.

Section 1.1: Google Launches Gemma 2: A New Era in Open Source

Google has officially released Gemma 2, the latest generation of its open models, and it is now available to developers. Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology as Google’s Gemini models.

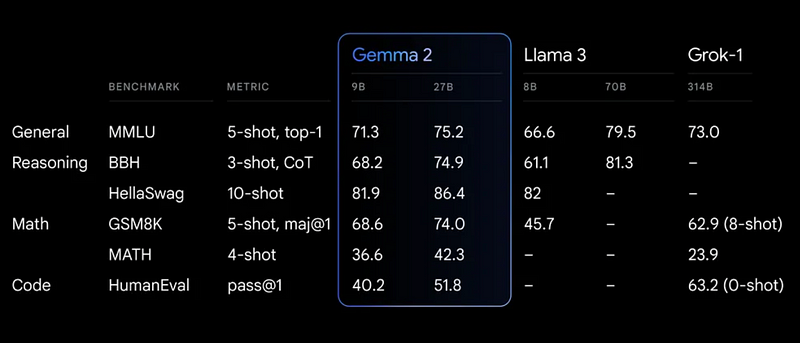

Gemma 2 is available in two sizes, with 9 billion and 27 billion parameters; Google has also announced a 2.6 billion parameter model to follow. According to Google, the 27 billion parameter version delivers performance comparable to models more than twice its size, and the 9 billion parameter model outperforms comparably sized models such as Llama 3 8B.

Our Perspective

Strong, competitive open large language models (LLMs) from Google are a promising development. Notably, according to Google, these models can run inference on a single NVIDIA H100 Tensor Core GPU or TPU host, which significantly lowers deployment costs. We expect Gemma 2 to gain considerable traction in the open-source community. The models are currently available on Hugging Face.
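To make the single-accelerator point more concrete, here is a rough back-of-the-envelope sketch of how much memory the model weights alone would need. It assumes 2 bytes per parameter (bfloat16) and an 80 GB accelerator. Both figures are our assumptions, not numbers from Google's announcement, and the helper name `weight_memory_gb` is hypothetical. The estimate ignores the KV cache, activations, and runtime overhead, so real requirements are higher.

```python
# Rough estimate of weight memory only.
# Ignores KV cache, activations, and runtime overhead.
BYTES_PER_PARAM_BF16 = 2      # assumption: weights stored in bfloat16
ACCELERATOR_MEMORY_GB = 80    # assumption: an accelerator with 80 GB of memory


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_BF16) -> float:
    """Return the approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in [("Gemma 2 9B", 9e9), ("Gemma 2 27B", 27e9)]:
    size = weight_memory_gb(params)
    fits = size < ACCELERATOR_MEMORY_GB
    print(f"{name}: ~{size:.0f} GB of weights, fits on one device: {fits}")
```

Under these assumptions, the 27 billion parameter model needs roughly 54 GB for its weights, which leaves some headroom on an 80 GB device. At 4 bytes per parameter the same model would need about 108 GB and would no longer fit.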

More information can be found in the Google Blog.

Section 1.2: OpenAI's CriticGPT: Enhancing ChatGPT's Code Output

OpenAI has introduced CriticGPT, a model based on GPT-4 that is designed to find errors in code written by ChatGPT. According to OpenAI's blog, people who review ChatGPT's code with help from CriticGPT outperform those without help 60% of the time. CriticGPT writes critiques that point out the errors it detects, and these critiques are intended to support the human feedback used to refine ChatGPT.

CriticGPT does not eliminate hallucinations, but it is designed to make reviews of ChatGPT's responses more accurate. OpenAI acknowledges several current limitations. The model was trained mainly on fairly short ChatGPT responses, so longer and more complex outputs will require further work. In addition, real-world errors are often spread across many parts of a response, which makes them harder to catch than errors that can be pointed out in one place.

Our Perspective

Despite the progress made, LLMs continue to face challenges, particularly in critical applications due to potential inaccuracies. We appreciate OpenAI's advancements, yet recognize there is still much work to be done to achieve near-perfect LLM functionality.

More information can be found in the OpenAI Blog.

Chapter 2: Innovations in AI: Efficient Language Models

Section 2.1: Breakthroughs Without Matrix Multiplication

Researchers from the USA and China have developed language models that do not rely on memory-heavy matrix multiplications. Matrix multiplications account for a large share of the compute and memory demands that limit how far models can scale, yet the authors report that their models remain competitive with contemporary transformers.

The authors provide a GPU-efficient implementation of their MatMul-free model that lowers memory usage during training by up to 61% compared to an unoptimized baseline. During inference, an optimized kernel can reduce memory consumption by more than ten times compared to unoptimized models.
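To give an intuition for how a layer can avoid multiplications, the sketch below uses ternary weights, meaning every weight is -1, 0, or +1. As far as we understand the paper, this is the idea behind its dense layers. With such weights, each output is simply a sum of some inputs minus a sum of others. This is a plain-Python illustration, not the authors' GPU implementation, and the function name `ternary_matvec` and the example values are ours.

```python
from typing import List


def ternary_matvec(weights: List[List[int]], x: List[float]) -> List[float]:
    """Compute W @ x for a ternary matrix W using only additions and subtractions."""
    out = []
    for row in weights:
        acc = 0.0
        for w, value in zip(row, x):
            if w == 1:
                acc += value      # +1 weight: add the input
            elif w == -1:
                acc -= value      # -1 weight: subtract the input
            elif w != 0:
                raise ValueError("weights must be -1, 0, or +1")
            # 0 weight: the input is skipped entirely
        out.append(acc)
    return out


# Hypothetical example values for illustration.
W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, 3.0]
print(ternary_matvec(W, x))  # [-2.5, 4.5]
```

The result matches an ordinary matrix-vector product, but no multiplication is ever executed, which is what makes this kind of layer cheaper in hardware.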

For those interested in a more detailed exploration, we recommend reviewing the full paper. The authors have also made the code available on GitHub.

Our Perspective

Matrix multiplications (MatMul) contribute substantially to the computational costs associated with large language models (LLMs). Training these models requires vast energy resources. Thus, finding more efficient methodologies is crucial to balancing AI development with environmental considerations.

More information can be found in the arXiv paper and the GitHub repository.

Articles of the Week

If you're interested, you might also enjoy these blog posts:

- Mistral’s Codestral: Create a local AI Coding Assistant for VSCode

- 7 Hidden Python Tips for 2024

- Responsible Development of an LLM Application + Best Practices

Thank you for reading, and we look forward to connecting with you again soon!

- Tinz Twins